Feature selection is one of the most critical pre-processing activities in any machine learning process. It aims to select the subset of attributes, or features, that makes the most meaningful contribution to a machine learning activity. To understand it, let us consider a small example: predicting the weight of students based on past information about similar students, which is captured in a ‘Student Weight’ data set. The data set has four features: Roll Number, Age, Height and Weight. Roll Number has no effect on the weight of the students, so we eliminate this feature. The new data set therefore has only three features. This subset of the data set is expected to give better results than the full set.

| Age | Height | Weight |
|-----|--------|--------|

The data set above is the reduced data set. Before proceeding further, we should ask why we reduced its dimensionality, or in other words, what the issues with high-dimensional data are.

High-dimensional data refers to data sets with a large number of variables, attributes or features, as is common in domains such as DNA analysis and geographic information systems (GIS). Such data may have hundreds or even thousands of dimensions, which is a big challenge for any ML algorithm to handle. Training on it also requires a large amount of computational resources and time. In addition, a model built on an extremely high number of features may be very difficult to understand. For these reasons, it is necessary to take a subset of the features instead of the full set. So we can deduce that the objectives of feature selection are a faster and more cost-effective learning model, a model that the learning algorithm can handle well, and a model that is easier to understand.

Main Factors Affecting Feature Selection

a. Feature Relevance: In the case of supervised learning, the input data set (the training data set) has a class label attached. A model is induced from the training data set so that it can assign class labels to new, unlabeled data. Each of the predictor variables is expected to contribute information that helps decide the value of the class label. If a variable contributes no information, it is said to be irrelevant. If its contribution to the prediction is very small, the variable is said to be weakly relevant. The remaining variables, which make a significant contribution to the prediction task, are said to be strongly relevant.

In the case of unsupervised learning, there is no training data set or labelled data. Similar data instances are grouped together, and the similarity of data instances is evaluated based on the values of different variables. Certain variables do not contribute any useful information for deciding the similarity or dissimilarity of data instances. Hence, those variables make no significant contribution to the grouping process. They are marked as irrelevant variables in the context of the unsupervised machine learning task.

We can understand the concept through a real-world example. At the start of the article, we used a sample student data set. In it, Roll Number doesn’t contribute any significant information for predicting the Weight of a student. Similarly, if we are trying to group together students with similar academic capabilities, Roll Number cannot contribute any information. So, in the context of grouping students with similar academic merit, the variable Roll Number is irrelevant. Any feature that is irrelevant in the context of a machine learning task is a candidate for rejection when we are selecting a subset of features.

b. Feature Redundancy: A feature may contribute information that is similar to the information contributed by one or more other features. For example, in the student data set, the features Age and Height contribute similar information. This is because weight is expected to increase with age, and likewise with height. So, in the context of this problem, Age and Height contribute similar information. In other words, whether or not the feature Height is present, the learning model will give almost the same results. When one feature is similar to another in this way, it is said to be potentially redundant in the context of the machine learning problem.

All features having potential redundancy are candidates for rejection from the final feature subset. Only a few representative features out of a set of potentially redundant features are considered for the final feature subset. In short, the main objective of feature selection is to remove all features that are irrelevant and to keep a representative subset of the features that are potentially redundant. This leads to a meaningful feature subset in the context of a specific learning task.

Measures of Feature Relevance and Redundancy

a. Measures of Feature Relevance: In the case of supervised learning, mutual information is considered a good measure of how much information a feature contributes towards deciding the value of the class label. That is why it is a good indicator of the relevance of a feature with respect to the class variable. The higher the mutual information of a feature, the more relevant that feature is. Mutual information can be calculated as follows:

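$$MI(C, f) = H(C) + H(f) - H(C, f)$$

where the marginal entropy of the class is

$$H(C) = -\sum_{i=1}^{K} p(C_i) \log_2 p(C_i)$$

the marginal entropy of the feature f, taken over its values x, is

$$H(f) = -\sum_{x} p(f = x) \log_2 p(f = x)$$

and H(C, f) is the joint entropy of the class and the feature:

$$H(C, f) = -\sum_{i=1}^{K} \sum_{x} p(C_i, f = x) \log_2 p(C_i, f = x)$$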

Here K is the number of classes, C is the class variable, and f is a feature that takes discrete values.

In the case of unsupervised learning, there is no class variable. Hence, feature-to-class mutual information cannot be used to measure the information contribution of the features. Instead, the entropy of the feature set is calculated with each feature left out in turn. The features are then ranked in descending order of the information gain attributed to each, and the top β percent of features (the value of β is a design parameter of the algorithm) are selected as relevant features. The entropy of a feature f is calculated using Shannon’s formula below:

$$H(f) = -\sum_{x} p(f = x) \log_2 p(f = x)$$

This formula is used only for features that take discrete values. For continuous features, discretization should be performed first to estimate the probabilities p(f = x).
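The entropy and mutual information measures described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: it assumes the class labels and the feature are already discrete, estimates probabilities from simple frequency counts, and uses made-up toy data. The function names `entropy` and `mutual_information` and the variables `height_band` and `roll_parity` are hypothetical.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy (in bits) of a sequence of discrete values."""
    n = len(values)
    counts = Counter(values)
    # p(f = x) is estimated as the relative frequency of each value x.
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def mutual_information(labels, feature):
    """MI(C, f) = H(C) + H(f) - H(C, f) for two equal-length sequences."""
    # The joint entropy is the entropy of the (label, value) pairs.
    joint = list(zip(labels, feature))
    return entropy(labels) + entropy(feature) - entropy(joint)


# Hypothetical toy data: four students with a discretized weight class.
weight_class = ["light", "light", "heavy", "heavy"]
height_band = ["short", "short", "tall", "tall"]  # follows the label exactly
roll_parity = ["odd", "even", "odd", "even"]      # unrelated to the label

mi_height = mutual_information(weight_class, height_band)
mi_roll = mutual_information(weight_class, roll_parity)
print(f"MI(weight, height band) = {mi_height:.3f}")
print(f"MI(weight, roll parity) = {mi_roll:.3f}")
```

In this toy data the height band determines the weight class completely, so it scores far higher than the roll number parity, which carries no information about the class.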

b. Measures of Feature Redundancy: There are multiple measures of similarity of information contribution. The main ones are:

1. Correlation Based Similarity Measure

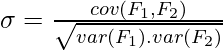

Correlation is a measure of linear dependency between two random variables. Pearson’s product-moment correlation coefficient is one of the most popular and widely accepted measures of correlation between two random variables. For two random feature variables F1 and F2, the Pearson coefficient is defined as:

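$$\rho(F_1, F_2) = \frac{\operatorname{cov}(F_1, F_2)}{\sqrt{\operatorname{var}(F_1)\,\operatorname{var}(F_2)}}$$

$$\operatorname{cov}(F_1, F_2) = \sum_{i=1}^{n} (F_{1i} - \bar{F}_1)(F_{2i} - \bar{F}_2)$$

$$\operatorname{var}(F_1) = \sum_{i=1}^{n} (F_{1i} - \bar{F}_1)^2, \quad \text{where } \bar{F}_1 = \frac{1}{n} \sum_{i=1}^{n} F_{1i}$$

$$\operatorname{var}(F_2) = \sum_{i=1}^{n} (F_{2i} - \bar{F}_2)^2, \quad \text{where } \bar{F}_2 = \frac{1}{n} \sum_{i=1}^{n} F_{2i}$$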

Correlation values range between +1 and -1. A correlation of +1 or -1 indicates perfect linear correlation. If the correlation is zero, the features have no linear relationship. Generally, for feature selection problems, a threshold value is adopted to decide whether two features are similar enough to be treated as redundant.
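As a rough sketch, the Pearson coefficient and the threshold test can be written in plain Python as below. The sample values for `age` and `height` and the threshold of 0.9 are assumptions chosen only for illustration; a suitable threshold depends on the problem.

```python
import math


def pearson(f1, f2):
    """Pearson correlation coefficient between two equal-length features."""
    n = len(f1)
    mean1 = sum(f1) / n
    mean2 = sum(f2) / n
    cov = sum((a - mean1) * (b - mean2) for a, b in zip(f1, f2))
    var1 = sum((a - mean1) ** 2 for a in f1)
    var2 = sum((b - mean2) ** 2 for b in f2)
    # Note: a constant feature has zero variance and would divide by zero.
    return cov / math.sqrt(var1 * var2)


# Hypothetical sample values for five students.
age = [10, 11, 12, 13, 14]
height = [138, 143, 149, 156, 160]

THRESHOLD = 0.9  # assumed cut-off, not a recommended value

rho = pearson(age, height)
redundant = abs(rho) >= THRESHOLD
print(f"correlation = {rho:.3f}, potentially redundant: {redundant}")
```

The absolute value is compared against the threshold because a strong negative correlation indicates similar information content just as a strong positive one does.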

2. Distance-Based Similarity Measure

The most common distance measure is the Euclidean distance, which, between two features F1 and F2, is calculated as:

$$d(F_1, F_2) = \sqrt{\sum_{i=1}^{n} (F_{1i} - F_{2i})^2}$$

Here the features represent an n-dimensional data set, that is, each feature holds n values. Let us consider a data set with two features under consideration, Subjects (F1) and Marks (F2). The Euclidean distance between the two features will be calculated like this:
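The distance formula can also be sketched in Python. The values of `f1` and `f2` below are hypothetical and chosen only so that the arithmetic is easy to follow; they are not taken from the article's data set.

```python
import math


def euclidean_distance(f1, f2):
    """Euclidean distance between two features of equal length n."""
    if len(f1) != len(f2):
        raise ValueError("both features must have the same number of values")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f1, f2)))


# Hypothetical values: squared differences are 9, 16, 0 and 0.
f1 = [1, 2, 3, 4]
f2 = [4, 6, 3, 4]

d = euclidean_distance(f1, f2)
print(d)  # sqrt(9 + 16) = 5.0
```

A smaller distance means the two features carry more similar values, so a pair with a very small distance is a candidate for redundancy.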